Hi everyone,

As promised in our last post, we continue our container blog series. This time we want to give you an overview of how our network stack evolved over the past year.

Networking is one of the hardest parts of modern distributed systems. Following microservice architectures, you will end up with a lot of atomic services which scale dynamically depending on the current load and still need to communicate with each other. It becomes even more challenging when CI/CD techniques are involved and you end up with multiple versions of a service. Techniques like blue-green or canary releases all depend on proper network routing. In our use case, most of the service-to-service communication is done inside our Kubernetes clusters, which makes it a bit easier in some cases. Another, more user-facing requirement is the ability to make services reachable from outside a cluster, for example exposing a web frontend to customers. This kind of networking is called Ingress in the Kubernetes world.

Ingress Traffic in a Nutshell

When containers try to talk to each other inside a Kubernetes cluster it is mostly done with the help of a network plugin (like Flannel or Calico). Almost all of those plugins will create some kind of overlay network. Most of the time, this overlay is a virtual network with IP ranges which only exist in the overlay context. The surrounding infrastructure does not even know that this overlay exists. On the one hand this is pretty cool, since we can basically do whatever we want inside the virtual network. On the other hand, we have a problem when we need to communicate between the real and the virtual network. We need to find some solution for how to make a service available to the outside of the cluster.

There are some plugins (like Calico) which can also be used to make containers directly routable, which means you could treat them the same way as any target inside the network. This could be suitable for some use cases, but it could also be a security risk at some point. Imagine you have a very sensitive database which should only be accessed from your application, because the application handles things like authentication and authorization. Protecting the DB with directly routable containers can be a challenge, especially if you also have the constraint to separate different environments, projects or teams. For that reason, we decided against directly routable containers and went with the overlay. The overlay brings an indirect layer of defense. If you can’t reach the container directly, you can’t attack it directly. Now let’s take a look at the different communication directions.

The outgoing case, when a container wants to talk to a target on the LAN or WAN (egress traffic), can be solved by using Network Address Translation (NAT). The container IP address will be hidden behind the physical address of each Kubernetes node. The default gateway, which is normally a switch, a router, or a firewall, will never see a container IP address. This is very important for some network environments, as some of these gateway devices will have a policy to drop packets from sources they don’t know. Since the container overlay is a virtual network and is unknown to the gateway, all packets which are not NATed will be dropped. As you can imagine, this can have a massive impact on your applications.

The far more challenging direction is incoming (ingress) traffic. In order to make our containerized services available to the outside, we need to make some adjustments to the surrounding network infrastructure. The bare minimum we need is an IP address which can be accessed. Thankfully, Kubernetes helps us to make this task easier. The Service object offers two solutions:

- Type NodePort: If you set the type field to NodePort, the Kubernetes master(s) will allocate a port from a range specified by the --service-node-port-range flag (default 30000-32767). Each node will proxy that port (the same port number on every Node) into your Service. That port will be reported in your Service’s .spec.ports[*].nodePort field.

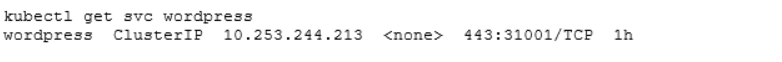

Let’s take a look at a simple Wordpress example service, where we want to expose port 443 to the outside:
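A minimal sketch of what such a Service could look like, built here as a Python dict and printed as JSON (kubectl apply -f accepts JSON as well as YAML). The Service name wordpress, the app: wordpress selector and the target port are assumptions made for illustration, not taken from our actual manifests.

```python
import json


def wordpress_service():
    """Build a NodePort Service manifest as a plain dict.

    The name, selector label and targetPort are hypothetical.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "wordpress"},
        "spec": {
            # NodePort asks Kubernetes to open the same port on every node.
            "type": "NodePort",
            # Pods carrying this label receive the traffic (assumed label).
            "selector": {"app": "wordpress"},
            "ports": [
                # No nodePort given: Kubernetes picks a free one from the range.
                {"name": "https", "port": 443, "targetPort": 443, "protocol": "TCP"}
            ],
        },
    }


if __name__ == "__main__":
    # Pipe the output into `kubectl apply -f -` to create the Service.
    print(json.dumps(wordpress_service(), indent=2))
```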

By setting the type to NodePort, Kubernetes will automatically pick a free port from the specified range and expose it to the outside. In some situations, you will also want to set a static port. You can do that by adding nodePort in the ports section like:
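As a hedged sketch, a ports entry with a static nodePort could be built like this. The helper port_entry is hypothetical; the range check mirrors the default of --service-node-port-range mentioned above, and a cluster configured with a different range would need other bounds.

```python
# Default of --service-node-port-range; your cluster may use another range.
DEFAULT_NODE_PORT_RANGE = (30000, 32767)


def port_entry(port, node_port=None, allowed=DEFAULT_NODE_PORT_RANGE):
    """Return one item for .spec.ports, optionally pinned to a static nodePort."""
    entry = {"port": port, "targetPort": port, "protocol": "TCP"}
    if node_port is not None:
        low, high = allowed
        if not low <= node_port <= high:
            raise ValueError(
                f"nodePort {node_port} is outside the allowed range {low}-{high}"
            )
        entry["nodePort"] = node_port
    return entry


if __name__ == "__main__":
    print(port_entry(443, node_port=30443))  # static port
    print(port_entry(443))                   # dynamic: Kubernetes chooses
```

The API server rejects a nodePort outside the configured range, so the check here only catches the mistake earlier.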

For now we stick with the dynamic version. Let’s see what Kubernetes did with our service:

As you can see, Kubernetes assigned the port 31001 for exposing the service. The magic behind this is done by the kube-proxy. It is now listening on port 31001 on all Kubernetes nodes:

Kube-proxy will forward every request which is hitting the 31001 port to our Wordpress Pods.

- Type LoadBalancer: When using cloud providers which support external load balancers, setting the type field to LoadBalancer will provision a load balancer for your Service. The actual creation of the load balancer happens asynchronously, and information about the provisioned balancer will be published in the Service’s .status.loadBalancer field.

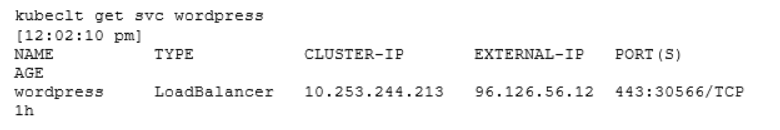

In our Wordpress example, it would look like this:
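A minimal sketch under the same assumptions as before (hypothetical Service name and selector label). It also shows how the external address could be read from .status.loadBalancer once the provider has finished provisioning; while the load balancer is still being created, that field is empty.

```python
def loadbalancer_service():
    """Service manifest of type LoadBalancer (name and label are assumed)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "wordpress"},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": "wordpress"},
            "ports": [{"name": "https", "port": 443, "targetPort": 443}],
        },
    }


def external_addresses(service):
    """Return the addresses published in .status.loadBalancer.ingress.

    Provisioning is asynchronous, so the list is empty until the
    provider has reported an IP or hostname.
    """
    status = service.get("status") or {}
    ingress = (status.get("loadBalancer") or {}).get("ingress") or []
    return [item.get("ip") or item.get("hostname") for item in ingress]


if __name__ == "__main__":
    svc = loadbalancer_service()
    print(external_addresses(svc))  # nothing provisioned yet
    # Simulate what the API would report after provisioning.
    svc["status"] = {"loadBalancer": {"ingress": [{"ip": "96.126.56.12"}]}}
    print(external_addresses(svc))
```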

If your environment supports this, you will automatically get an IP address assigned. This IP address is owned by an external load balancer. It is not part of the Kubernetes cluster and is normally managed by your infrastructure provider. You can view the status of the service:

As you can see, the field EXTERNAL-IP is now set to 96.126.56.12. If you try to reach the service over the IP you will be load balanced to your service. In addition, you will notice that Kubernetes also assigned a node port to the service. This is needed because the external load balancer still needs to access the Pod(s) somehow. The difference is that you don’t need to expose the Kubernetes nodes directly to the public facing LAN / WAN.

These two methods can help to expose your apps, but there are still some problems left. Imagine you have many services which use different technologies and all need to be exposed, such as a frontend service which provides a nice user interface and a backend service with a REST API. You will have to secure your exposed services. It should be obvious to use HTTPS with a proper certificate and implement some kind of authentication for your service. In some cases, you may also need to tweak some HTTP headers or implement some rewrite rules (for example to redirect all requests to the TLS encrypted port). These simple tasks can become very complex and hard to maintain because of the different technologies involved. In most cases you need to set and update them inside your app or in the container your app is running in. To make this task easier, the Kubernetes developers introduced a new object called Ingress.

The Ingress Object

The Ingress object manages external access to the services in a cluster, typically HTTP(S). Ingress can provide load balancing, SSL termination and name-based virtual hosting. So instead of managing settings in different ways for each container or app, the Ingress will provide a common config schema for all of them.

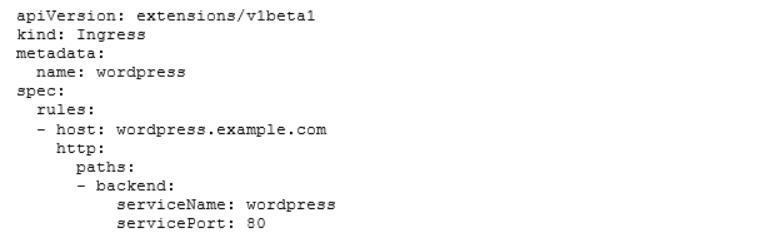

So how does it work? Let’s take a look at an example again:
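A minimal sketch of an Ingress, again as a Python dict printed as JSON. It uses the current networking.k8s.io/v1 schema, which differs from the API version available when we started. The hostname wordpress.example.domain and the Service name wordpress are assumptions for illustration.

```python
import json


def wordpress_ingress(host="wordpress.example.domain", service="wordpress"):
    """Ingress that routes all paths of one hostname to one Service.

    Hostname and Service name are hypothetical defaults.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": service},
        "spec": {
            "rules": [
                {
                    # Fully qualified domain name the Ingress listens on.
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                # Backend: the Service behind this Ingress.
                                "backend": {
                                    "service": {
                                        "name": service,
                                        "port": {"number": 443},
                                    }
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(wordpress_ingress(), indent=2))
```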

So, we need to create an Ingress object and define the fully qualified domain name on which the Ingress should listen. In addition, we need to tell the Ingress which backend the requests should be sent to. This way, we connect an Ingress with a Service. An Ingress without any backing Service will not work at all.

Under the hood, the Ingress is basically a reverse proxy. On most infrastructures and cloud providers you need to install a so-called Ingress controller. This is a proxy like NGINX, Traefik or Envoy combined with a Kubernetes integration. The Ingress controller will connect to the Kubernetes API and will listen to events like create, delete, update, patch for objects of the kind Ingress. To create a proxy rule, the controller will also try to use the info about the Service which is connected to the Ingress. For each event, the controller will automatically create, update or delete proxy rules dynamically without any interruption for the users.

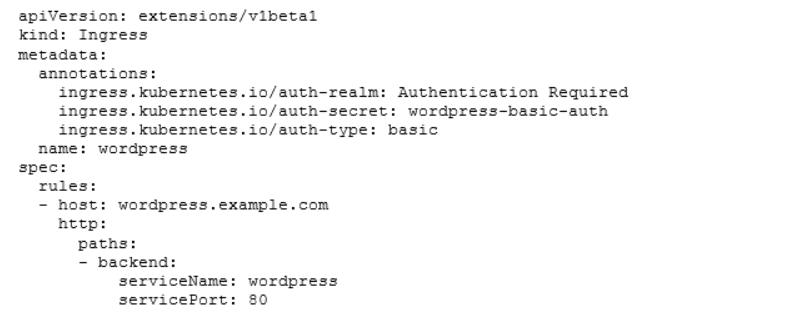

All controllers have a basic and common set of features. You can use them by adding annotations to your Ingress object. Let’s for example add basic authentication to our Wordpress service:
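A hedged sketch of how such annotations could be attached to an existing Ingress manifest. The annotation keys below use the nginx.ingress.kubernetes.io/ prefix of the community NGINX Ingress controller; other controllers and older releases use different prefixes, so treat the exact keys as an assumption. The Secret name wordpress-basic-auth and the realm text are hypothetical.

```python
import copy

# Prefix used by the community NGINX Ingress controller (assumption:
# your controller or version may expect a different prefix).
PREFIX = "nginx.ingress.kubernetes.io/"


def with_basic_auth(ingress, secret_name, realm="Authentication Required"):
    """Return a copy of an Ingress manifest with basic auth annotations added."""
    result = copy.deepcopy(ingress)
    annotations = result.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[PREFIX + "auth-realm"] = realm         # text shown in the pop-up
    annotations[PREFIX + "auth-secret"] = secret_name  # Secret with credentials
    annotations[PREFIX + "auth-type"] = "basic"        # authentication scheme
    return result


if __name__ == "__main__":
    ingress = {"kind": "Ingress", "metadata": {"name": "wordpress"}}
    print(with_basic_auth(ingress, "wordpress-basic-auth")["metadata"])
```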

So we added three annotations. The auth-realm, for example, will add a field to the pop-up when you hit your Wordpress URL. The second annotation, auth-secret, will tell the controller which Kubernetes secret to use for the credentials. The last annotation, auth-type, will set the type of authentication we want to use.

Beyond the basic feature set, each controller will come with specific features. For example, with the Traefik Ingress controller you can define a request redirect using regex by adding something like this:

Now that we explained the basics, we want to show you where we started and what problems and challenges we had with our first ingress traffic implementation.

The Half Dynamic Approach

We started in 2015 with the first iteration of our ingress solution. Back in the day, we only knew that we needed an automated and dynamic solution for HTTP(S) based services. Services which only operate on TCP level were out of scope for that first iteration.

Looking at the two methods for exposing a service, we could only use NodePort. There was no way to use type: LoadBalancer since there was no product / software which supported it for an on-premise Kubernetes installation.

We also needed to look for a proper Ingress controller which supports basic auth and custom headers. In 2015, there were only two implementations which seemed to be production ready: HAProxy and Nginx. After a little proof of concept, we went with Nginx.

On the Kubernetes side we had all components ready. But we needed to find a solution for integrating with the on-premise network stack. We wanted to provide our users with a highly available single URL. In addition, we didn’t want to expose our Kubernetes nodes directly to the internal network. It was natural to use the same approach as the cloud providers and place a load balancer between our consumers and our Kubernetes nodes.

With the load balancer in place, we had a highly available IP address, which was great but not enough. Referencing IP addresses is error prone and not suitable for human interaction. For this requirement DNS is a perfect fit. We wanted to give our users the possibility to choose their own URLs. The consumers of the services also needed the ability to resolve these dynamically created URLs. Since all clients inside the P7S1 network talk to the corporate DNS, we needed to place the DNS records there. The only problem was that a fully dynamic solution would have been a huge amount of work: we would have needed to write our own Kubernetes controller which interacts with the DNS API. A good compromise was to use a DNS wildcard entry and a DNS subdomain.

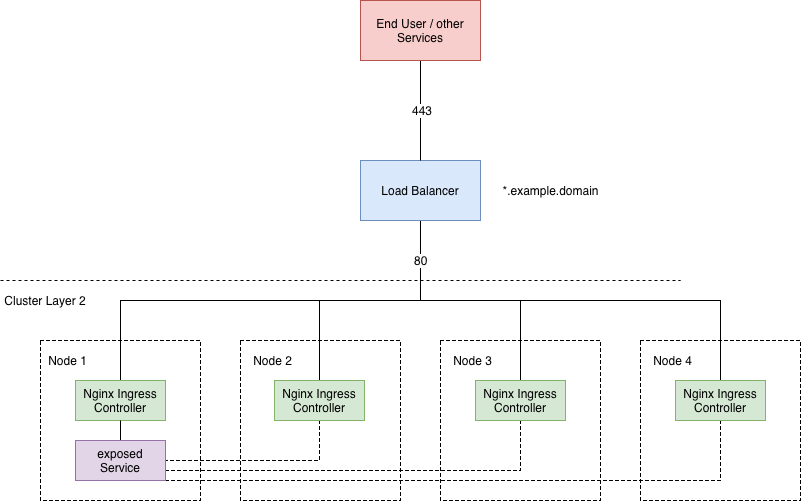

The whole setup looked like this:

Old ingress Stack

Let’s talk a little bit more about the load balancer. We ended up with a highly available hardware load balancer. We also tried some software load balancers on VMs, but it was quite difficult to implement a smooth failover between the active and the passive instance (this topic could fill another blog post, so we will skip the details ;). We already had the load balancer in place to make the Kubernetes API highly available, so we thought: why not create a new virtual instance on it? So we assigned a single IP with an alias in our DNS subdomain example.domain.

To make the configuration easier, we installed the Ingress controller as a DaemonSet, which deploys exactly one Pod on each Kubernetes node. We exposed the internal port 443 as NodePort on port 30443. The load balancer has all Kubernetes nodes in its configuration and forwards requests to port 30443.

Now the hacky part: the DNS wildcard entry. It just says *.example.domain will point to 10.221.134.120. Everything you would add as a prefix to our DNS subdomain will be pointed to that IP:

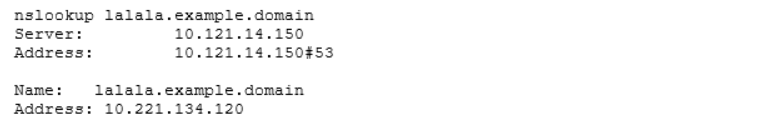

The service lalala in this example is something we chose totally at random. But you can see that the DNS answer for our DNS request is the VIP of our load balancer. This solution however can have some really bad downsides:

- You have to pay A LOT of attention when communicating with URLs which end with example.domain. If you misspell something in the prefix, you will still get an answer. Again, the DNS will resolve anything and will point to the load balancer. The load balancer will forward the request to the Ingress controller and you will get a 404 return code.

- Adding a new subdomain is a manual task on many levels.
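The wildcard behaviour, including the misspelling pitfall from the first point, can be illustrated with a toy resolver. This is only a sketch of the matching logic, not how a DNS server is implemented; the function name resolve is hypothetical, while the zone and the VIP are the example values used above.

```python
WILDCARD_ZONE = "example.domain"      # wildcard entry: *.example.domain
LOAD_BALANCER_VIP = "10.221.134.120"  # VIP of the load balancer


def resolve(name):
    """Toy lookup: every name below the wildcard zone maps to the VIP."""
    normalized = name.rstrip(".").lower()
    if normalized.endswith("." + WILDCARD_ZONE):
        return LOAD_BALANCER_VIP
    return None  # not covered by the wildcard entry


if __name__ == "__main__":
    # A real service name and a misspelled one get the very same answer.
    for candidate in ("wordpress.example.domain", "wordpres.example.domain"):
        print(candidate, "->", resolve(candidate))
```

Because DNS cannot tell a typo from a valid name, the error only surfaces later as a 404 from the Ingress controller.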

Our load balancer also has some additional functions. It redirects all requests to port 443. With that, we enforce HTTPS. We don’t want unsecured communication between our consumers and the load balancer. Regarding the PKI, we did a trick similar to the DNS wildcard entry: we created a wildcard certificate. It is located on the load balancer, where we do SSL termination. All communication which the load balancer passes to the Ingress controller is unencrypted. The layer 2 network segment of the cluster is secured by a firewall, and we treat it as a trusted and closed zone. There is no real need for components or services inside that network to use encryption for communication.

When a consumer sends a request, it will hit the load balancer and will be passed to the Ingress controller. The Ingress controller will take a look at the HTTP headers and will read out the Host field. Based on that information it will try to find a matching proxy rule to forward the request to a Pod. It could be that the Ingress controller which processes the request is not located on the same Kubernetes node as the target Pod. Good for us: kube-proxy will handle this and will make sure the request reaches our target Pod. If no rule matches, the Ingress controller will send a 404 back to the client.
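The Host-based lookup can be sketched in a few lines. This is a simplified model of what the controller's proxy does, not its actual implementation; the rule table and the backend notation are made up for illustration.

```python
from typing import Dict, Optional

# Hypothetical proxy rules: Host header value -> backend Service.
RULES: Dict[str, str] = {
    "wordpress.example.domain": "wordpress:443",
}


def match_backend(host_header: str, rules: Dict[str, str]) -> Optional[str]:
    """Find the backend for a Host header (hostnames only, no IPv6 literals)."""
    # Drop an optional ":port" suffix and compare case-insensitively.
    host = host_header.split(":", 1)[0].strip().lower()
    return rules.get(host)


def handle(host_header: str, rules: Dict[str, str] = RULES) -> str:
    """Describe what the proxy would do with the request."""
    backend = match_backend(host_header, rules)
    if backend is None:
        return "404 Not Found"  # no rule matches this hostname
    return f"forward to {backend}"


if __name__ == "__main__":
    print(handle("wordpress.example.domain"))
    print(handle("lalala.example.domain"))
```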

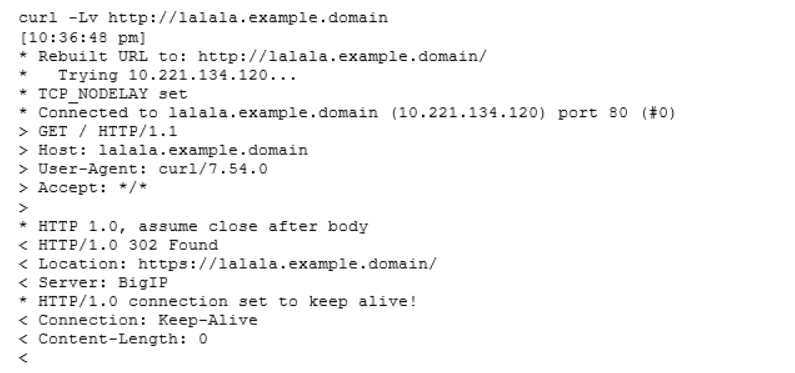

Let’s take a look at what the whole chain looks like when we request a service:

We first hit the load balancer and our client gets back a 302. Notice we called our service on port 80, so no encryption. But the load balancer redirects us and forces us to use port 443 and HTTPS over TLS.
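The redirect step can be modelled as a tiny function. This is only a sketch of the behaviour described above; the function name and the return shape are hypothetical, and the real logic lives in the configuration of the hardware load balancer.

```python
def load_balancer_redirect(scheme, host, path="/"):
    """Return (status, headers) for plain HTTP requests, or None for HTTPS."""
    if scheme == "http":
        # Unencrypted request on port 80: send the client to HTTPS.
        return 302, {"Location": f"https://{host}{path}"}
    return None  # already HTTPS: terminate TLS and pass the request on


if __name__ == "__main__":
    print(load_balancer_redirect("http", "lalala.example.domain"))
    print(load_balancer_redirect("https", "lalala.example.domain"))
```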

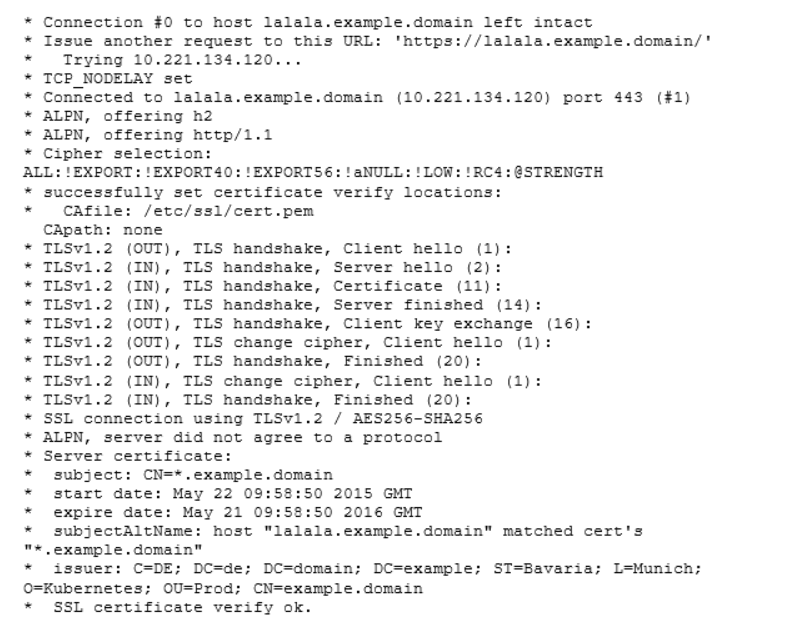

We see the TLS handshake and that our URL matches the subject for the wildcard cert. So the verification passes.

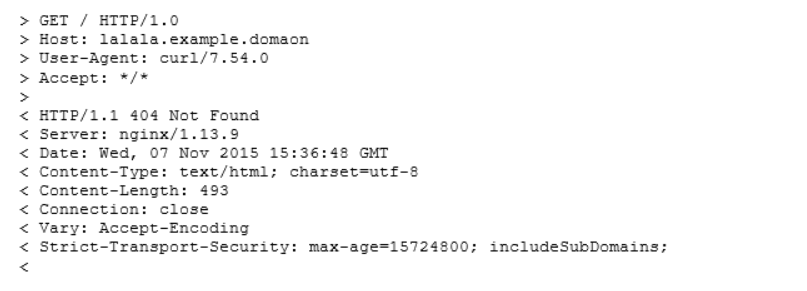

And finally, the answer. Since the service does not exist, our Ingress controller responds with 404.

Now that we have a complete overview, let’s talk about where this solution is not so optimal.

What could be better?

This stack worked very well until new requirements started coming in. In 2017 we were asked to host Apache Kafka. Kafka is used for building real-time data pipelines and streaming apps. Messages are sent and fetched over TCP. For internal cluster usage that was quite easy and we didn’t need to change anything. But we also had the requirement that services outside of the cluster need to access Kafka. As mentioned in the section above, we can only expose HTTP(S) based services. Exposing TCP based services would mean a lot of work: assigning a NodePort and requesting a new load balancer config for each Kafka broker. Doing this for every Kafka cluster, and again whenever a cluster is scaled, would end in chaos.

We also learned that the Nginx Ingress controller comes with some downsides too. The controller component will update the Nginx config file on every Ingress event. To pick up the config changes, Nginx needs to be reloaded every time. This means a short downtime of the service. Also, the config file got really big. At the time it was about 5 MB and 50,000 lines. We feared that this would only scale to a certain point.

As already mentioned, the DNS wildcard entry is quite hacky. We wanted to get rid of this as well. The problem becomes even worse when we want to add new DNS subdomains, which would lead to even more wildcard entries. In a perfect world, we would like to set a DNS entry for each Ingress dynamically.

We were also not happy with the handling of the hardware load balancer. Don’t get us wrong, it did a good job in the setup, but it is managed by the central network department and when we wanted to change something, we needed to request that change.

If you want to know how we solved these problems, stay tuned. Part 2 of the series will be released very soon, where we introduce our current ingress and network stack.

As always, if you have questions or other comments, feel free to send us a mail at peo@p7s1.net.

Cheers, The PEO team.